Google's search algorithm governs how pages are ranked. It orders pages so that the most relevant content is shown to the user. How does Google use its search algorithm to rank pages? In this article I will try to answer your questions.

What is the Google search algorithm?

As mentioned above, Google's search algorithm makes the best results appear at the top of the search results. An algorithm is a logically defined set of rules and steps that must be followed to carry out a process or transaction, and Google's search algorithm works the same way. To return the best results from its search index for any query, Google uses a complex system containing a huge set of rules and instructions that it follows while delivering results.

Google has never revealed the exact algorithm publicly. There is, however, a possibility that it may have to disclose some of those secrets because of a ruling by a UK court: Google is involved in a lawsuit (dispute) with a company named Foundem.

Coming back to our subject: Google regularly makes hundreds of changes to the algorithm that affect Search Engine Result Pages (SERPs). The objective behind these changes is to deliver the best possible SERPs.

Prerequisite terms:

- Latent Semantic Analysis: Also known as Latent Semantic Indexing. This technique analyses and identifies the relationships between the words used in the pages of a website. It rests on an assumption: words with similar meanings tend to appear in similar pieces of text.

- Keyword Stuffing: A spamming technique used to gain an unfair ranking advantage. A website owner, developer or digital marketer fills a website with keywords by using them everywhere: in visible content, links, metadata and so on. Today search engines can easily identify keyword stuffing, and a search engine can even ban a website for it. The recommended practice is to focus on one keyword per web page, or two at most.

- Inbound links: Incoming links to your web page from other websites on the internet, also called backlinks.

- Black-Hat SEO: Black-hat SEO uses deceptive practices such as keyword stuffing, hidden or invisible text and link spamming to manipulate the search engine index.
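To make keyword stuffing concrete, here is a minimal sketch of one simple heuristic: keyword density, the share of a page's words that are a given keyword. This is not how Google detects stuffing; the function names and the 10% cut-off are hypothetical and chosen only for illustration.

```python
import re
from collections import Counter


def keyword_density(text: str, keyword: str) -> float:
    """Share of words in `text` that are exactly the single-word `keyword`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return 0.0
    return Counter(words)[keyword.lower()] / len(words)


def looks_stuffed(text: str, keyword: str, threshold: float = 0.1) -> bool:
    """Flag text whose keyword density exceeds a hypothetical threshold."""
    return keyword_density(text, keyword) > threshold


natural = "Our bakery sells fresh bread every morning. Try the sourdough and rye."
stuffed = "bread bread cheap bread best bread buy bread"
print(looks_stuffed(natural, "bread"), looks_stuffed(stuffed, "bread"))
```

Real pages are much longer than these two samples, so any practical threshold would be far lower; the point is only that repeating a keyword out of proportion to the rest of the text is easy to measure.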

The major Google updates are discussed below.

1. Brandy Google update: Released in 2004

This major Google search algorithm update focused on the concept of latent semantic analysis. It became a turning point because, rather than changing a ranking algorithm, it modified Google's index. With this change, Google tried to shift the focus toward intent. Dynamically generated links ranked better, and anchor text became an important parameter. These links helped Google look at the other pages of a website to evaluate whether they also contain the query or search terms.

For example, suppose a three-page website has pages named Home, About and Contact, of which only the home page is indexed. The home page contains anchor text for the site name, which is also a keyword, and that anchor is an internal link to the About page. After the Brandy update, the indexed home page helps the search engine learn about the About page as well.
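The Home/About/Contact example can be sketched as a breadth-first walk over internal links that starts from the indexed pages and records the anchor text pointing at each page it reaches. This is a simplified illustration, not Google's crawler; the site structure, the anchor texts and the `discover` function are hypothetical.

```python
from collections import deque

# Hypothetical site: each page maps to its internal links as (anchor text, target page).
SITE_LINKS = {
    "home": [("Acme Widgets", "about")],  # site name used as keyword-rich anchor text
    "about": [("Get in touch", "contact")],
    "contact": [],
}


def discover(indexed, links):
    """Follow internal links outward from already-indexed pages.

    Returns a dict mapping every reachable page to the anchor texts that point at it.
    """
    anchors = {page: [] for page in indexed}
    queue = deque(indexed)
    while queue:
        page = queue.popleft()
        for text, target in links.get(page, []):
            if target not in anchors:  # first time we reach this page
                anchors[target] = []
                queue.append(target)
            anchors[target].append(text)
    return anchors


print(discover(["home"], SITE_LINKS))
```

Starting from the home page alone, the walk reaches About (labelled with the anchor text "Acme Widgets") and then Contact.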

2. Panda Google update: Released in 2011

The Panda update helped the search engine rank pages according to their quality. Panda affected a large share of results (around 12% of search queries). Basically, Panda assigns a quality score to websites. Until 2015 it was a separate update, but in 2016 Google officially incorporated it into its core algorithm. Websites with duplicate or thin content ranked lower, while websites with unique and relevant content ranked higher.

Panda also led to a sudden increase in copyright-infringement complaints, because original content was sometimes ranked lower than copied versions of it. It also became easier for Google to identify spammers. With Panda, intent-based quality assessment revolutionised SEO.

Duplicate content, thin content, inbound links, content quality and the ratio of inbound links to search queries all play an important role in ranking.
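Duplicate and thin content can be approximated with two simple, well-known measures: word shingling with Jaccard similarity for near-duplicates, and a word count for thin pages. This is a generic sketch, not Panda itself; the 3-word shingles, the 0.8 similarity cut-off and the 300-word minimum are all hypothetical values.

```python
import re


def shingles(text: str, k: int = 3) -> set:
    """Set of overlapping k-word sequences ("shingles") in the text."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by the size of the union (0.0 for two empty sets)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def is_near_duplicate(page_a: str, page_b: str, threshold: float = 0.8) -> bool:
    """Treat two pages as near-duplicates when their shingle sets mostly overlap."""
    return jaccard(shingles(page_a), shingles(page_b)) >= threshold


def is_thin(text: str, min_words: int = 300) -> bool:
    """Hypothetical thin-content rule: fewer than `min_words` words."""
    return len(re.findall(r"\w+", text)) < min_words


a = "the quick brown fox jumps over the lazy dog"
b = "the quick brown fox jumps over the lazy cat"
print(jaccard(shingles(a), shingles(b)))
```

Changing one word out of nine still leaves 6 of 8 distinct shingles shared, which is why shingling catches lightly edited copies that an exact-match comparison would miss.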

3. Penguin Google Algorithm: Released in 2012

Penguin officially became part of Google's core algorithm in 2016, with the objective of identifying websites that use black-hat SEO practices and ranking them lower. When Penguin launched, it affected about 3% of English-language search queries. The practices it mainly targeted were paid backlinks and keyword stuffing.

With Penguin, inbound links from unrelated, low-quality websites became the major trigger. The objective of Penguin was to make websites follow the search engine's webmaster guidelines; a website that fails to do so is penalized.
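The idea that links from unrelated, low-quality sites act as a trigger can be sketched as a simple link-profile check. The quality scores, the relatedness flag and both thresholds are hypothetical; Google has not published how Penguin scores links.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Backlink:
    source: str     # linking domain (hypothetical examples below)
    quality: float  # hypothetical 0-1 quality score of the linking site
    related: bool   # whether the linking site covers a related topic


def spammy_link_share(links: Sequence[Backlink], min_quality: float = 0.3) -> float:
    """Fraction of backlinks that come from low-quality AND unrelated sites."""
    if not links:
        return 0.0
    bad = sum(1 for link in links if link.quality < min_quality and not link.related)
    return bad / len(links)


def flag_link_profile(links: Sequence[Backlink], max_share: float = 0.5) -> bool:
    """Flag a profile when suspicious links exceed a hypothetical share."""
    return spammy_link_share(links) > max_share


profile = [
    Backlink("news.example", 0.9, True),
    Backlink("spam-directory.example", 0.1, False),
    Backlink("casino-links.example", 0.05, False),
    Backlink("hobby-blog.example", 0.2, True),  # low quality but on-topic, so not counted
]
print(spammy_link_share(profile), flag_link_profile(profile))
```

Requiring both conditions reflects the point above: a small but relevant site linking to you is not the same as a paid link from an unrelated spam directory.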

4. Hummingbird Google update: Released in 2013

Hummingbird arrived as a more personal search algorithm based entirely on user intent. With Hummingbird, Google aimed to return results based on what users actually intend. It also strengthened local search, the Knowledge Graph and voice search. Let us look at the problem that existed before Hummingbird.

Suppose you typed the keyword 'movie': the results could be exactly the same as for the keyword 'movie making course'. This created a serious problem of intent, and Hummingbird was the solution.

Local SEO is location-based SEO that uses 'Google My Business' listings to show SERPs for nearby locations. Because of local SEO, users may see different results for the same query. For example, two users, one in central Delhi and the other in west Delhi, will get different results for the same query, such as 'Clubs near me'.

The Knowledge Graph is like a graph-based, detailed fact page for a company, a business or any query.

With voice search, Google also adopted NLP (Natural Language Processing) techniques, which improved the search engine considerably.

5. Pigeon Google update: Released in 2014

Since local SEO had already arrived with Hummingbird, the Pigeon update aimed to improve local search. Google My Business plays a major role in local search, so Pigeon displays SERPs on the basis of location and distance, i.e. the nearest Google My Business page results. The Pigeon algorithm affected local businesses to a huge extent: people began using directions to find businesses, which helped many small and large businesses grow.
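Ranking listings by distance, as Pigeon does for nearby Google My Business results, can be sketched with the haversine great-circle formula. The club names and coordinates are hypothetical, only roughly placed in Delhi, and real local ranking also weighs relevance and prominence, not distance alone.

```python
import math


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on Earth, in kilometres."""
    r = 6371.0  # mean Earth radius in km
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def nearest(listings, user_lat: float, user_lon: float, limit: int = 3):
    """Sort (name, lat, lon) listings by distance from the user and keep the closest."""
    return sorted(listings, key=lambda b: haversine_km(user_lat, user_lon, b[1], b[2]))[:limit]


# Hypothetical listings with approximate coordinates.
CLUBS = [
    ("Club A", 28.6300, 77.2200),  # central Delhi
    ("Club B", 28.6519, 77.1909),  # towards west Delhi
    ("Club C", 28.5355, 77.3910),  # further south-east
]

print(nearest(CLUBS, 28.6315, 77.2167, limit=1))  # user in central Delhi
print(nearest(CLUBS, 28.6519, 77.1000, limit=1))  # user in west Delhi
```

The same query, 'Clubs near me', returns a different top listing for each user, which is the behaviour described in the local SEO example.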

6. Mobile Google Algorithm: Released in 2015

This mobile update was nicknamed Mobilegeddon. With it, Google ensured that mobile-friendly pages rank better than pages that are not mobile-friendly. Google knows that, with digitalisation, more than 60% of people use it on mobile devices, so SERPs should show pages optimized for mobile devices to give users a better experience. The update affected rankings only for searches on mobile devices, and it applied to individual web pages rather than to whole websites. It also increased demand for websites with AMP (Accelerated Mobile Pages).

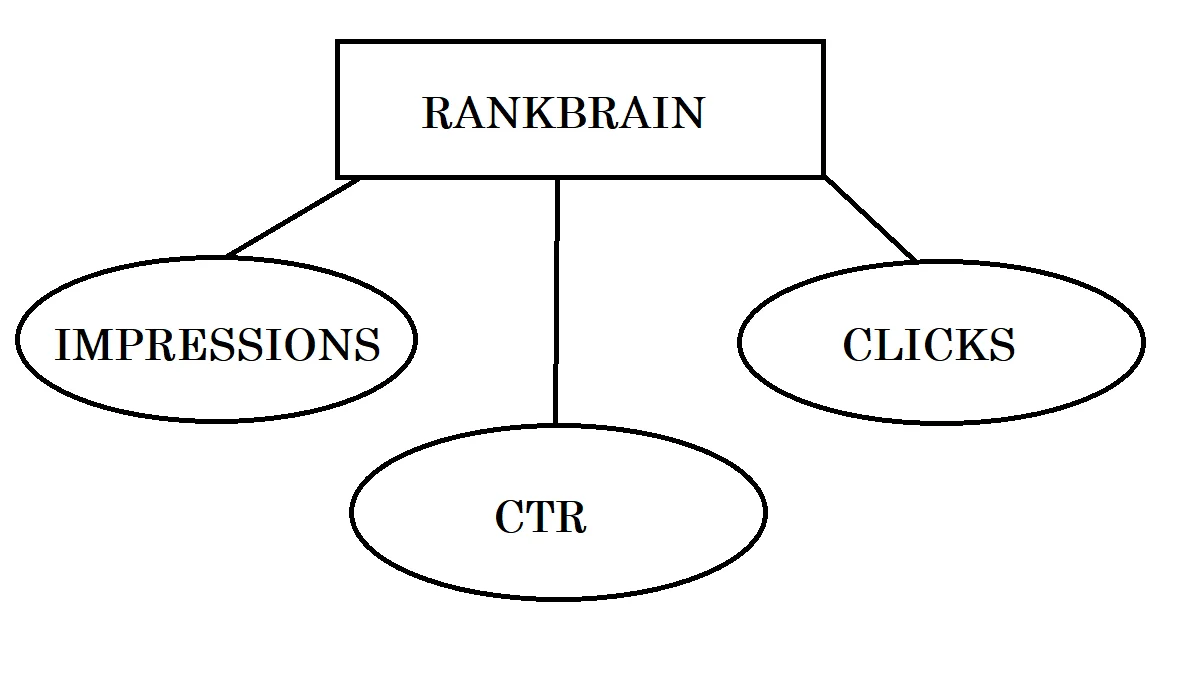
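Google's mobile-friendly evaluation looked at several things, such as text size and tap targets. One common signal that is easy to check is a responsive viewport meta tag. The sketch below uses Python's standard `html.parser` to look for it; treating this single tag as "mobile-friendly" is a deliberate simplification, and the helper names are hypothetical.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content of a <meta name="viewport"> tag, if the page has one."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)  # attribute values may be None for bare attributes
        if tag == "meta" and (attrs.get("name") or "").lower() == "viewport":
            self.viewport = attrs.get("content") or ""


def has_responsive_viewport(html: str) -> bool:
    """True if the page declares a viewport that scales to the device width."""
    parser = ViewportFinder()
    parser.feed(html)
    return parser.viewport is not None and "width=device-width" in parser.viewport.replace(" ", "")


print(has_responsive_viewport(
    '<html><head><meta name="viewport" content="width=device-width, initial-scale=1"></head></html>'
))
```

Self-closing tags such as `<meta ... />` are also routed to `handle_starttag` by `HTMLParser`, so both HTML styles are handled.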

7. RankBrain Google Algorithm: Released in 2015

With the RankBrain algorithm, Google brought machine learning into ranking. Content quality gained new metrics, which later became some of the most important focus areas. Google has even described RankBrain as the third most important ranking signal, because it targets relevance. UI experience, calls to action, and retention and bounce rates are widely believed to help Google rank SERPs. For example, suppose the query 'How to make money online' brought up 40 results: the first few would be those with the best CTRs, low bounce rates and high retention times. (CTR is click-through rate, i.e. clicks per impression.)

Conclusion: RankBrain is a reputation-based algorithm, under which the most reputable sites rank higher.
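The engagement metrics mentioned above can be computed as follows: CTR is clicks divided by impressions, and bounce rate here is assumed to mean the share of clicks that quickly return to the results page. Google has not confirmed that it ranks on these metrics, so the equal-weight blend and the 300-second dwell cap in `engagement_score` are purely hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ResultStats:
    url: str
    impressions: int
    clicks: int
    bounces: int              # assumed: clicks that returned to the results page almost immediately
    avg_dwell_seconds: float  # average time spent on the page after a click

    @property
    def ctr(self) -> float:
        """Click-through rate: clicks per impression."""
        return self.clicks / self.impressions if self.impressions else 0.0

    @property
    def bounce_rate(self) -> float:
        """Share of clicks that bounced back."""
        return self.bounces / self.clicks if self.clicks else 0.0


def engagement_score(s: ResultStats, max_dwell: float = 300.0) -> float:
    """Hypothetical equal-weight blend of CTR, non-bounce rate and capped dwell time."""
    dwell = min(s.avg_dwell_seconds, max_dwell) / max_dwell
    return (s.ctr + (1 - s.bounce_rate) + dwell) / 3


def rerank(results):
    """Order results from most to least engaging under this toy score."""
    return sorted(results, key=engagement_score, reverse=True)


a = ResultStats("a", impressions=1000, clicks=100, bounces=20, avg_dwell_seconds=180)
b = ResultStats("b", impressions=1000, clicks=50, bounces=40, avg_dwell_seconds=30)
print([r.url for r in rerank([b, a])])
```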

8. Possum Google update: Released in 2016

Possum is also a location-based algorithm, but with an exception: it filters the results. Local search mostly deals with Google My Business pages, and here comes the twist: a shopping complex may contain more than 10 businesses in the same line of business. Which of them should appear? Possum tried to solve this issue by optimising the results.

The issue arises because only 2-3 of them are visible, and these may or may not be the best results for the user. As for the exception, Possum not only optimises nearby results but also returns SERPs for other physical locations. Before Possum, searching with a custom physical location did not show nearby Google My Business pages; Possum made this possible and improved location-based SEO results.
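The filtering problem (many businesses of the same kind at one address) can be sketched as "keep one listing per address and category". Picking the best-rated listing is a hypothetical tie-breaker chosen for illustration; the listing data and the `filter_duplicates` function are invented.

```python
from dataclasses import dataclass


@dataclass
class Listing:
    name: str
    address: str
    category: str
    rating: float


def filter_duplicates(listings):
    """Keep only the best-rated listing for each (address, category) pair."""
    best = {}
    for item in listings:
        # Normalise case and stray spaces so the same address groups together.
        key = (item.address.strip().lower(), item.category.lower())
        if key not in best or item.rating > best[key].rating:
            best[key] = item
    return sorted(best.values(), key=lambda l: l.rating, reverse=True)


# Hypothetical listings: three dentists in one complex, one elsewhere.
listings = [
    Listing("Smile Dental", "12 Mall Road", "dentist", 4.2),
    Listing("Bright Teeth", "12 mall road ", "dentist", 4.7),
    Listing("Care Dental", "12 Mall Road", "Dentist", 3.9),
    Listing("City Dental", "40 Park Street", "dentist", 4.5),
]
print([l.name for l in filter_duplicates(listings)])
```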

9. Fred Google update: Released in 2017

Fred is one of the updates that targeted deceptive practices used for aggressive monetization. These include an excessive number of advertisements, low-quality content, redirections, and affiliate links without value-driven content.

By 2017, Google had realized that blogging was dominating in terms of revenue generation, which is why more people were shifting to it. There had to be criteria that helped quality bloggers gain while penalizing black-hat SEO tricksters.

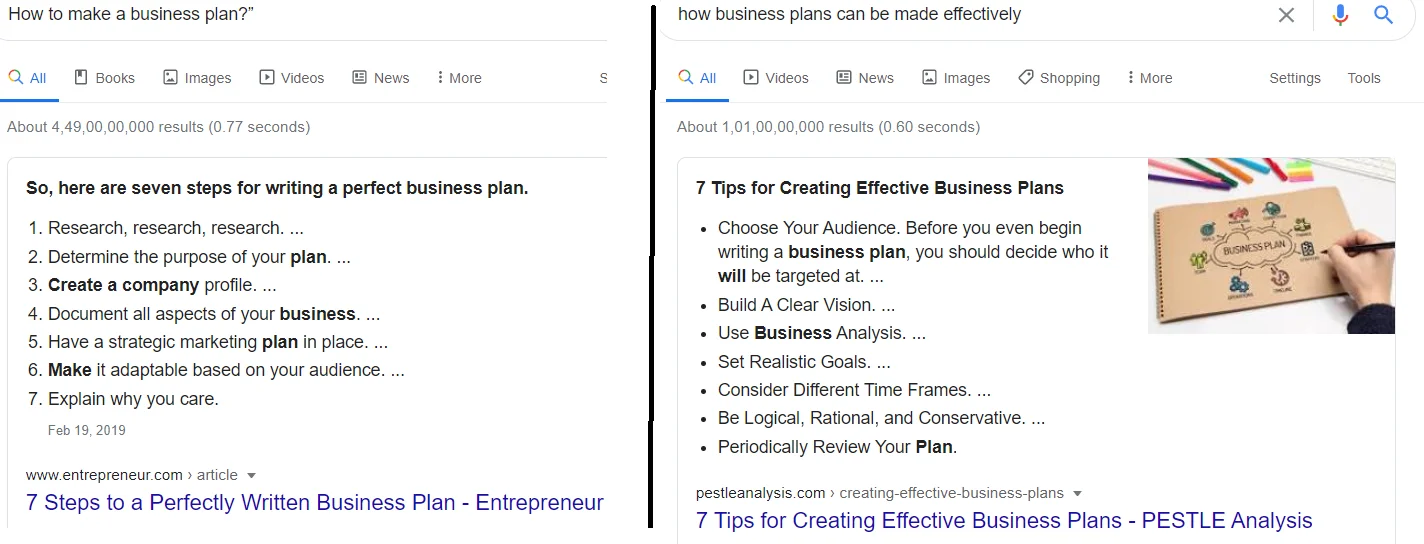

10. BERT Google update: Released in 2019

BERT (Bidirectional Encoder Representations from Transformers) was an update that affected around 10% of English-language searches in the US. It is regarded as one of the most significant updates since RankBrain. Having already adopted Natural Language Processing, Google used this update to apply artificial intelligence in a more advanced way. The objective was to understand the context of a query, which depends on how the phrase is worded.

For example, suppose a user searches "How to make a business plan?" and then "how business plans can be made effectively?"

With BERT, Google returns relevant results for both of these queries. Even though the two queries are closely related, the search engine tries to understand the context of what the user is searching for, along with their intention.

We can therefore say that the focus, which had shifted from keywords to intent, now shifted to context together with intent.
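To see why plain keyword matching struggles with the two business-plan queries, the sketch below measures their word overlap with Jaccard similarity. This is not BERT; it only shows the gap that a context-aware model has to close. The function names are hypothetical.

```python
import re


def tokens(query: str) -> set:
    """Lower-cased set of words in a query (order is discarded)."""
    return set(re.findall(r"[a-z]+", query.lower()))


def keyword_overlap(q1: str, q2: str) -> float:
    """Jaccard similarity of the two queries' word sets (1.0 = identical vocabulary)."""
    a, b = tokens(q1), tokens(q2)
    return len(a & b) / len(a | b) if a | b else 0.0


q1 = "How to make a business plan?"
q2 = "how business plans can be made effectively?"
print(round(keyword_overlap(q1, q2), 2))  # 0.18
print(keyword_overlap("plan a business", "business a plan"))  # 1.0
```

The two queries share only "how" and "business", so their overlap is 2/11 even though the intent is nearly identical. Conversely, a bag of words cannot tell apart two phrasings that use the same words in a different order. Both gaps are what context-aware models like BERT aim to close.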

11. Broad Core Google update: Released in January 2020

E-A-T Model: Expertise, Authoritativeness and Trustworthiness (E-A-T)

The idea of this update was to give new content an opportunity to compete with older content that was already ranking on Google, and to rank above it if the new content is better. Take an example: suppose a teacher publishes a book on trigonometry containing the top 100 common formulas for exams up to 2020, and because of its quality it becomes the best-selling book of that year. Then the exam authority changes the exam and question patterns, and a new author publishes the top 100 formulas according to the new pattern and syllabus. Does that mean the old book was irrelevant or poor? No, it was the best book of its time, but over time the new book had to take over for the benefit of students.

12. Passage-Based Indexing Google update: Released in February 2021

Passage-Based Indexing is a search algorithm update that Google introduced in February 2021. It is designed to improve the accuracy of search results by analyzing individual passages of text within web pages, rather than only the page as a whole. When someone enters a query, the search engine can evaluate each passage on a web page and identify which passages are most relevant to the query. Despite the name, Google has described it as a ranking change (often called passage ranking): pages are still indexed as a whole, but individual passages can be considered when ranking them.

Here is an example of how Passage-Based Indexing works:

Suppose someone searches for "how to train a puppy." In the past, Google favoured web pages that were relevant to the query as a whole, meaning the entire page needed to be focused on training puppies. With Passage-Based Indexing, Google can analyze individual passages on each page and identify which ones are most relevant to the query.

For example, if a web page includes a passage on "how to train a puppy to sit," Google can identify that specific passage as highly relevant to the query "how to train a puppy." The page can then appear in search results even if the page as a whole is not focused on training puppies.
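The puppy example can be sketched by splitting a page into paragraphs and scoring each one against the query on its own. Google has not published how it scores passages; the "fraction of query words present" score and the sample page below are hypothetical stand-ins.

```python
import re


def split_passages(page: str) -> list:
    """Treat each blank-line-separated paragraph as one passage."""
    return [p.strip() for p in re.split(r"\n\s*\n", page) if p.strip()]


def passage_score(passage: str, query: str) -> float:
    """Fraction of distinct query words that appear in the passage."""
    q = set(re.findall(r"[a-z]+", query.lower()))
    words = set(re.findall(r"[a-z]+", passage.lower()))
    return len(q & words) / len(q) if q else 0.0


def best_passage(page: str, query: str) -> tuple:
    """Return (score, passage) for the passage that best matches the query."""
    scored = [(passage_score(p, query), p) for p in split_passages(page)]
    return max(scored, key=lambda t: t[0], default=(0.0, ""))


# Hypothetical page that is mostly not about puppy training.
PAGE = (
    "Our family history and the dogs we have owned.\n\n"
    "How to train a puppy to sit: hold a treat above its nose and move it back.\n\n"
    "Favourite dog parks in the city."
)

print(best_passage(PAGE, "how to train a puppy"))
```

Scored as a whole, this page is mostly about other things; scored passage by passage, the second paragraph matches every query word.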

This update can be especially beneficial for long-form content, where there may be multiple passages that are relevant to a specific query. It can also be helpful for queries that have multiple interpretations or meanings, where specific passages can clarify the intended meaning of the query.

However, it’s worth noting that Passage-Based Indexing is not a replacement for traditional ranking factors like content quality, relevance, and authority. Rather, it is a complementary factor that can help improve the accuracy of search results by identifying and indexing the most relevant passages on each webpage.

Overall, Passage-Based Indexing is a significant update to Google’s search algorithm that has the potential to improve the accuracy and relevance of search results. By analyzing individual passages on each webpage, Google can now better understand the context and relevance of each page, leading to more accurate and helpful search results for users.

As with the January 2020 core update, the underlying principle is that better content should be able to rank above weaker content, whether it is new or old.

We have discussed the most important updates and changes. We hope you found this useful.

Want to know how search engines work?